What is IT Infrastructure Orchestration

Orchestration is a concept related to automation, addressing the problem of how to coordinate large-scale IT systems. Such systems often have to meet agreed-upon service levels, integrate huge numbers of separate services, and provide complex services to hundreds of thousands of users, all without missing a beat.

In this post, we’ll look at the different types of orchestration and see what it looks like in action. First, how is orchestration different from automation?

How does orchestration differ from automation?

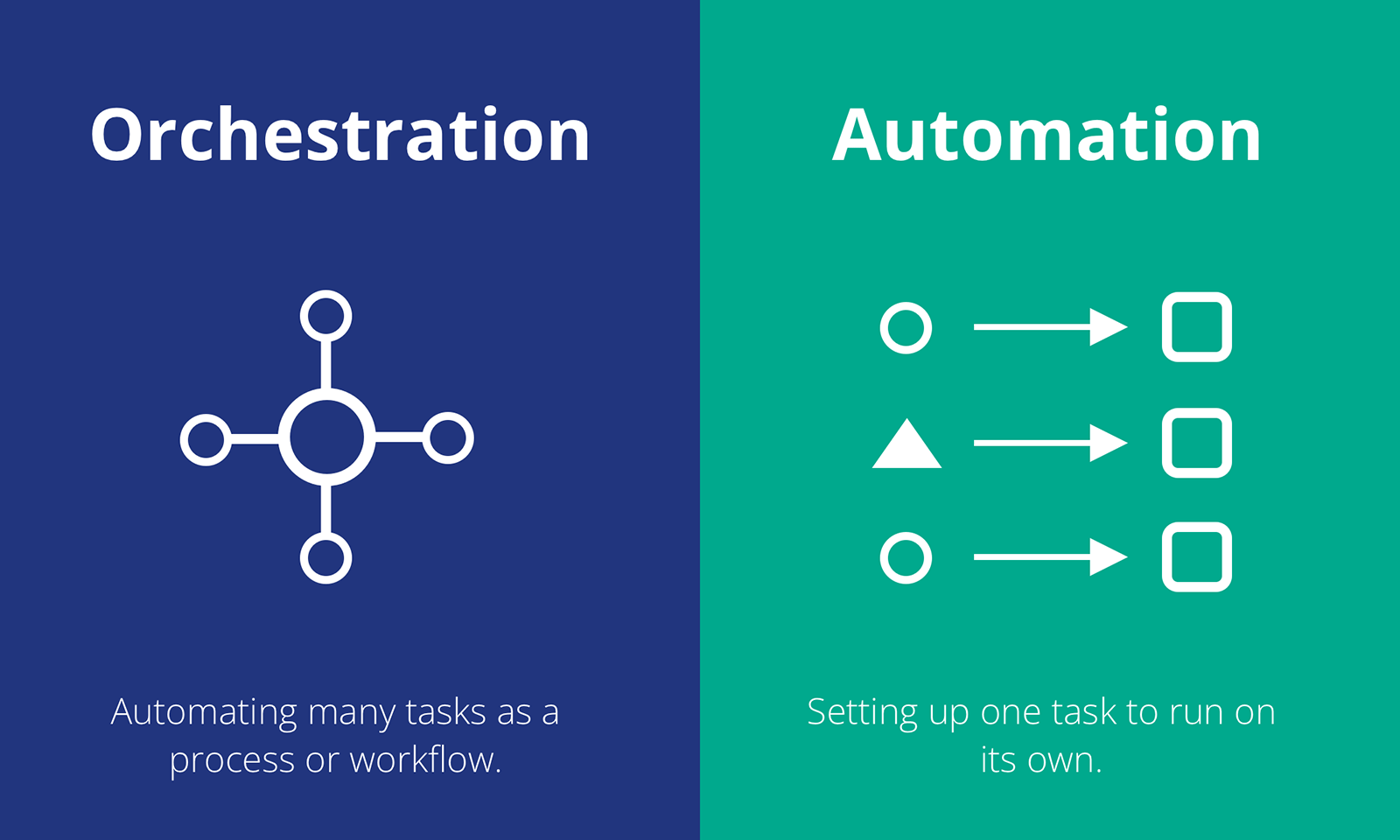

Orchestration and automation aren’t the same thing, but they are related. Automation is about taking a single repetitive, rules-based task and making it run with zero or minimal human intervention. That can shift a ton of work off the desks and calendars of real people, letting them focus on more valuable and productive tasks.

Orchestration is about deriving those same benefits at a wider, deeper scale, by setting up multi-task processes across multiple pieces of software to run with zero or minimal human intervention.

Steven Watts, writing in BMC Blogs, defined orchestration as ‘automation not of a single task but of an entire IT-driven process.’
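To make the distinction concrete, here is a minimal Python sketch. Every name in it (`provision_server`, `configure_network`, `deploy_app`, `orchestrate`) is hypothetical and invented for illustration. Each function stands in for one automated task, and `orchestrate` chains them into a single process.

```python
from typing import Callable

State = dict
Task = Callable[[State], State]

# Each function below is a stand-in for one automated task (hypothetical).
def provision_server(state: State) -> State:
    return {**state, "server": "provisioned"}

def configure_network(state: State) -> State:
    return {**state, "network": "configured"}

def deploy_app(state: State) -> State:
    return {**state, "app": "deployed"}

def orchestrate(tasks: list[Task], state: State) -> tuple[State, list[str]]:
    """Run several automated tasks as one process, passing state along."""
    completed = []
    for task in tasks:
        state = task(state)
        completed.append(task.__name__)
    return state, completed

final_state, completed = orchestrate(
    [provision_server, configure_network, deploy_app], {}
)
print(completed)
```

Automation lives inside each task. Orchestration is the part that decides the order and carries the results from one task to the next.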

Types of orchestration

Since IT systems are varied and complex, approaches to orchestration also differ. Here are some of the main methods for turning to-do lists into pipelines.

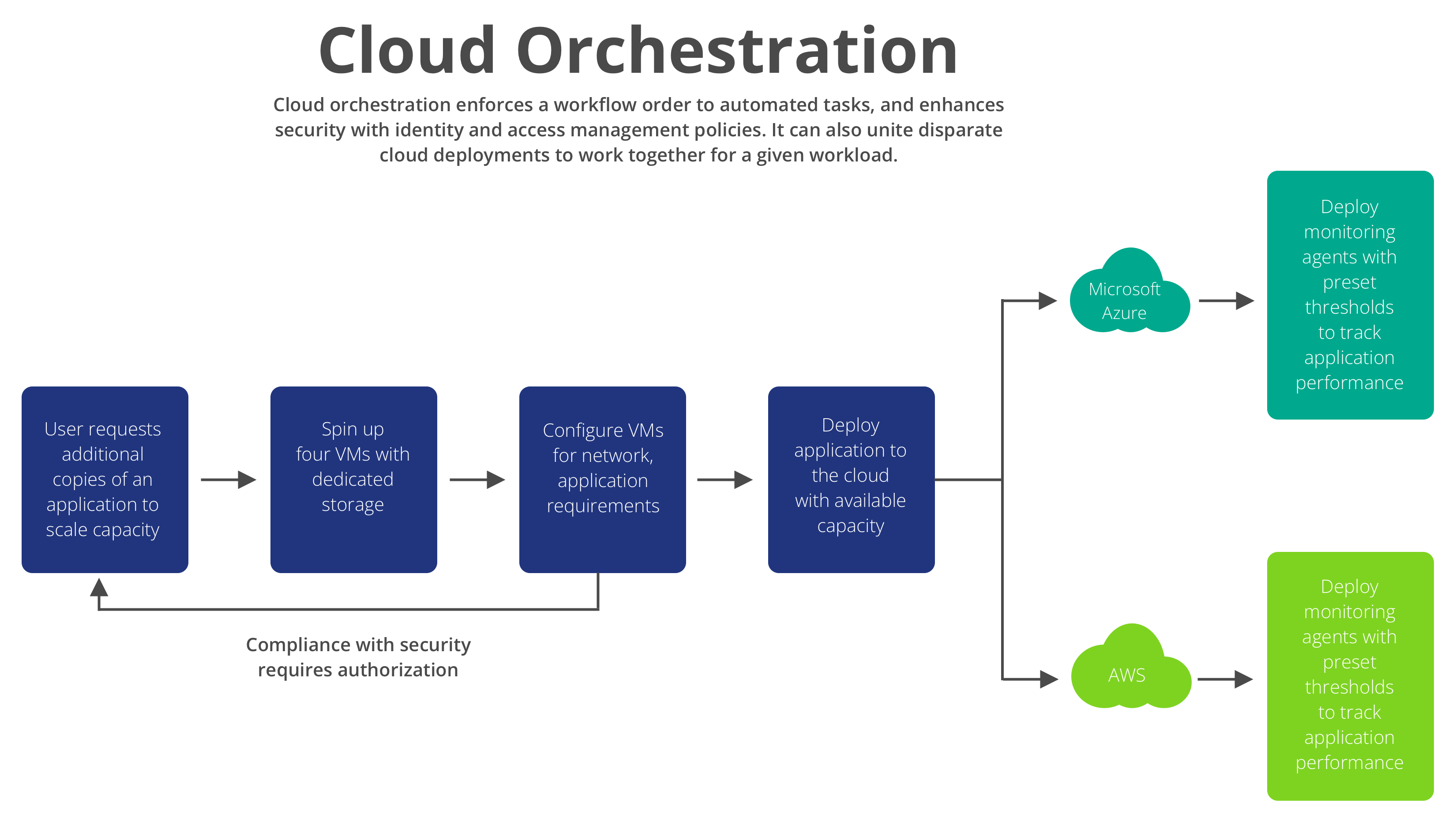

Cloud orchestration

Cloud orchestration is typically used to orchestrate predictable tasks in cloud-based IT systems, including provisioning, starting or decommissioning servers, allocating storage, configuring networks and granting applications access to cloud services like databases. These activities are multitask, multistage actions but they’re also relatively predictable; you’re creating an automated pipeline to cover outcomes that used to be achieved by sysadmins directly, then later through provisioning tools like Chef and Puppet.

Application orchestration

Application orchestration means integrating two or more software applications such that they operate together. Sometimes this is to achieve something relatively simple, such as cross-application data sharing. Sometimes it’s more involved, as when two applications are orchestrated to perform a multistage process that involves both of them. If this sounds like a familiar job, that’s because without some degree of orchestration, you’d spend most of your time wrangling your stack rather than getting anything done. But most organizations don’t approach this through the lens of deliberate and conscious orchestration; they typically don’t use the word and aren’t clear on the concept, meaning they’re usually doing it ad hoc and in a less efficient way than they could be.

When it’s done right, application orchestration lets you monitor and manage your integrations centrally, adding capabilities for message routing, security, reliability and the capacity to transform and reorient the system as a system, rather than cat-herding a bunch of disparate tools. Typically this is achieved by decoupling the integration logic from the applications and managing it via a container.

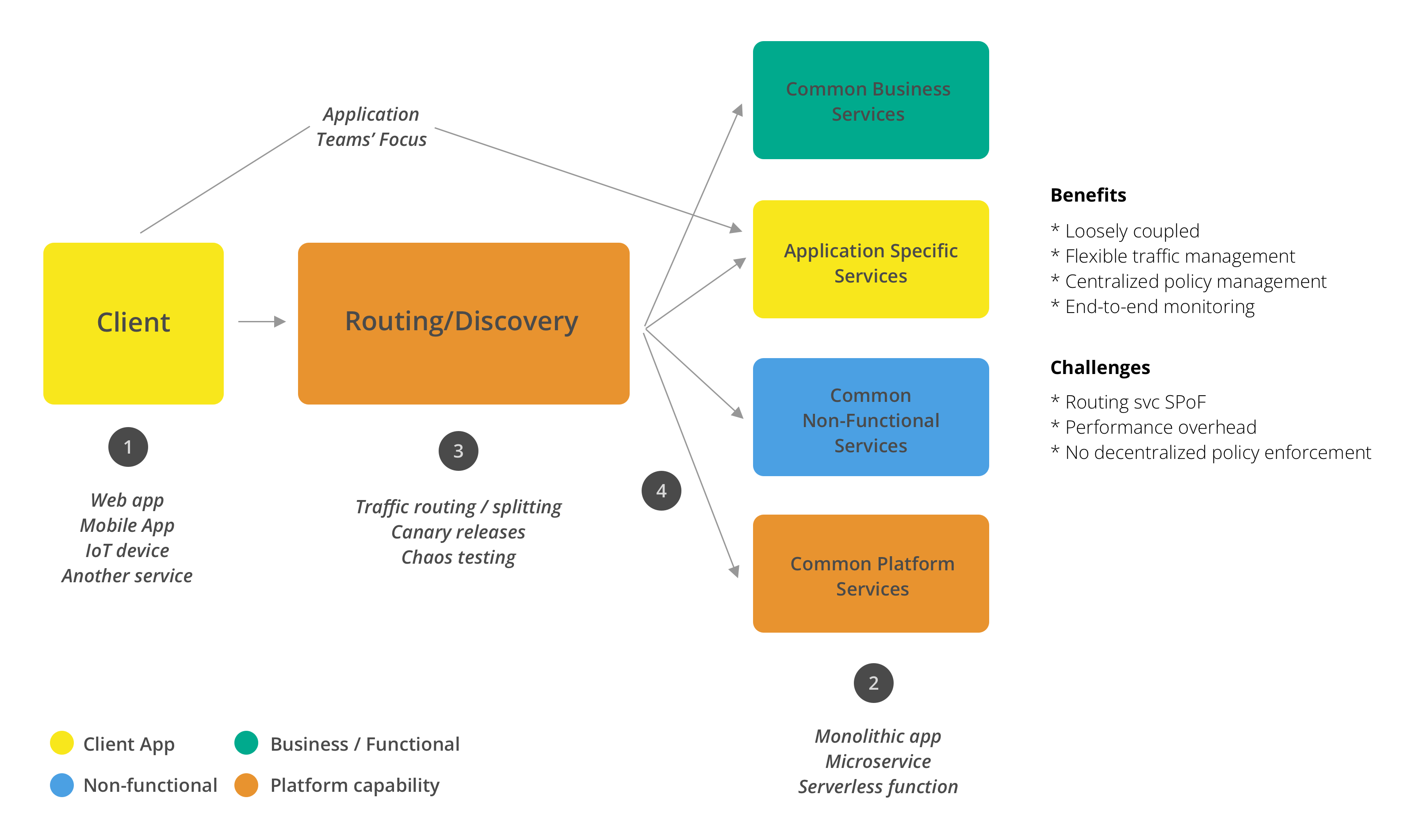

Service orchestration

Service orchestration works similarly to application orchestration. It just applies similar methods to microservices, networks and workflows rather than to more traditional ‘monolithic’ applications.

Typically, individual services lack the native capacity to be centrally coordinated, and they all have their own dependencies and requirements. Connecting them all without some centralized coordinating structure can be messy and ineffective, and as their number grows the mess you can create by plugging them all into each other wrong gets exponentially larger. Orchestrating them correctly, by contrast, lets you scale them as needed, tune them into business requirements, and avoid outages, failures and data loss.
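One small piece of that coordination is working out a safe order in which to start services that depend on each other. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later). The service names and the `startup_order` helper are assumptions made up for this example.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical services, each mapped to the services it depends on.
SERVICE_DEPENDENCIES = {
    "database": set(),
    "auth": {"database"},
    "api": {"auth", "database"},
}

def startup_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every service starts after its dependencies."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # exc.args[1] holds the list of nodes forming the detected cycle.
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc

print(startup_order(SERVICE_DEPENDENCIES))  # ['database', 'auth', 'api']
```

A central view of the dependency graph also catches circular dependencies before anything is started, which is hard to do when services are wired to each other one pair at a time.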

Container orchestration

Container orchestration can form a part of both application and service orchestration. It’s the coordinated automation of container management, letting you use software to provision and deploy containers, allocate resources, monitor them, and control their interactions.

This approach is relevant whenever you’re deploying containers in large numbers, whether that’s to manage a microservice-oriented ‘cloud-native’ implementation, containerized third-party services, or applications.

Teams will often use orchestration tools like Docker Swarm or Kubernetes, which rely on a configuration file that tells them how to organize and arrange container images. That file is the ‘DNA’ of your orchestrated container fleet. The tool will then use defined constraints like labels or metadata to automatically identify and assign hosts. You’re managing your container fleet not by making decisions yourself in real time, but by making them in advance and supplying them to the tool as a series of if-this-then-that commands. This gives you the same advantages that computerized automation delivers to other sectors, but it’s taking place inside your IT implementation itself.
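The idea of assigning hosts from label constraints can be sketched in a few lines of Python. This is a toy first-fit scheduler written for illustration only. It is not how Kubernetes or any other real tool is implemented, and the `Host`, `ContainerSpec` and `schedule` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Host:
    name: str
    labels: dict[str, str]
    capacity: int                      # max containers this host can run
    assigned: list[str] = field(default_factory=list)

@dataclass
class ContainerSpec:
    name: str
    constraints: dict[str, str]        # labels a host must carry

def schedule(specs: list[ContainerSpec], hosts: list[Host]) -> dict[str, Optional[str]]:
    """Place each container on the first host that matches and has room."""
    placement: dict[str, Optional[str]] = {}
    for spec in specs:
        placement[spec.name] = None    # stays None if no host qualifies
        for host in hosts:
            matches = all(host.labels.get(k) == v for k, v in spec.constraints.items())
            if matches and len(host.assigned) < host.capacity:
                host.assigned.append(spec.name)
                placement[spec.name] = host.name
                break
    return placement

hosts = [
    Host("node-a", {"disk": "ssd", "zone": "eu"}, capacity=1),
    Host("node-b", {"disk": "hdd", "zone": "eu"}, capacity=2),
]
specs = [
    ContainerSpec("db", {"disk": "ssd"}),
    ContainerSpec("cache", {"disk": "ssd"}),
    ContainerSpec("web", {"zone": "eu"}),
]
print(schedule(specs, hosts))
```

Here `db` lands on `node-a`, `cache` cannot be placed because the only SSD host is full, and `web` falls through to `node-b`. The decisions were all made in advance, in the specs, rather than by a person at deployment time.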

Workflow orchestration

Workflows consist of a series of interdependent ‘jobs to be done,’ with some forking depending on feedback, but a high degree of predictability. Orchestrating them means wrangling the workflow itself, the software and, if relevant, hardware involved, and then building a coherent structure to contain and direct it all. Individual workflows can be orchestrated and then fed into each other to allow organizations to orchestrate huge areas of productivity.

At first glance, this sounds a lot like a data pipeline — or like the kind of process you can construct using tools like IFTTT. And it is, to some extent. It differs in scale, range, and applicability. Workflow orchestration doesn’t just pipe data from one application to another. It triggers state changes in applications based on feedback from other applications, with reference to a canonical structure.
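A rough way to picture "state changes based on feedback, with reference to a canonical structure" is a transition table. In the Python sketch below, the states, events and function names are all hypothetical. The `TRANSITIONS` table plays the role of the canonical structure, and events stand in for feedback arriving from other applications.

```python
# Canonical structure: (current state, event) -> next state. All names are hypothetical.
TRANSITIONS = {
    ("pending", "build_passed"): "testing",
    ("pending", "build_failed"): "failed",
    ("testing", "tests_passed"): "deployed",
    ("testing", "tests_failed"): "failed",
}

def advance(state: str, event: str) -> str:
    """Return the next state, or stay put if the event is not relevant."""
    return TRANSITIONS.get((state, event), state)

def run_workflow(events: list[str], state: str = "pending") -> list[str]:
    """Feed events through the workflow and record every state visited."""
    history = [state]
    for event in events:
        state = advance(state, event)
        history.append(state)
    return history

print(run_workflow(["build_passed", "tests_passed"]))
# ['pending', 'testing', 'deployed']
```

Unlike a simple pipe from one application to another, the workflow forks: the same starting state can end in `deployed` or `failed` depending on what the other systems report.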

DevOps orchestration

DevOps is the combination of two disciplines, development and operations. It’s an effective approach that’s now widely utilized, but it’s still more siloed than it needs to be. In particular, development teams often have their own internal automation, and operations teams often do too. But remember, orchestration is about integrating the automation of individual tasks into whole processes.

DevOps orchestration can’t just be about merging several jobs into a larger script. Instead, it means building a specialized DevOps workflow, involving multiple automated tasks and stages, with appropriate resources to streamline the entire process.
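As a rough illustration of a workflow built from stages rather than one long script, the Python sketch below groups tasks into named stages and halts as soon as one task fails, so later stages never run against a broken build. The stage names, the placeholder tasks and `run_pipeline` are assumptions invented for this example, and real pipelines are usually defined in a CI/CD tool's own configuration format.

```python
from typing import Callable

Task = Callable[[], bool]            # a task reports success or failure
Stage = tuple[str, list[Task]]

def run_pipeline(stages: list[Stage]) -> tuple[bool, list[tuple[str, str, bool]]]:
    """Run stages in order; stop at the first failing task."""
    results = []
    for stage_name, tasks in stages:
        for task in tasks:
            ok = task()
            results.append((stage_name, task.__name__, ok))
            if not ok:
                return False, results
    return True, results

# Hypothetical placeholder tasks; real ones would call build and deploy tools.
def lint() -> bool:
    return True

def unit_tests() -> bool:
    return False                     # simulate a failing test run

def deploy() -> bool:
    return True

succeeded, results = run_pipeline([
    ("build", [lint]),
    ("test", [unit_tests]),
    ("release", [deploy]),
])
print(succeeded, results)
```

In this run the `release` stage is never reached, because the failure in `test` stops the pipeline first.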

To achieve all this, you’ll need an orchestration layer.

What is an orchestration layer?

Orchestration layers let you coordinate multiple APIs according to a centrally-determined plan. In effect, your orchestration layer functions as a ‘universal API’ which all your other applications plug into, and which makes multiple API calls to multiple different services in response to a single request.

Orchestration layers also manage data formatting between separate services, making different formats mutually intelligible and allowing different data streams to be split, merged, and routed. They assist with data transformation, server management, authentication, and integrating legacy systems.
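The Python sketch below shows the shape of this in miniature: one request to the orchestration layer fans out to two backend services, and the layer reconciles their different field names and units into a single response. The two services are stubbed as local functions with made-up data, and every name here is hypothetical. A real layer would call remote APIs and handle authentication and errors.

```python
# Stub backends standing in for separate services, each with its own format.
def profile_service(customer_id: str) -> dict:
    return {"id": customer_id, "fullName": "Ada Lovelace"}

def billing_service(customer_id: str) -> dict:
    return {"cust": customer_id, "balance_cents": 1250}

def get_customer_overview(customer_id: str) -> dict:
    """Single entry point: call both services and merge into one format."""
    profile = profile_service(customer_id)
    billing = billing_service(customer_id)
    return {
        "customer_id": customer_id,
        "name": profile["fullName"],                 # rename to a common key
        "balance": billing["balance_cents"] / 100,   # cents -> currency units
    }

print(get_customer_overview("c-1"))
# {'customer_id': 'c-1', 'name': 'Ada Lovelace', 'balance': 12.5}
```

The caller never needs to know that two services were involved, or that they disagree about what a customer ID field is called.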

Cloud-based orchestration layers manage interconnections between different cloud-based components and between the cloud and legacy or onsite IT implementations. Done right, an orchestration layer should turn your IT into a service-agnostic ‘plug-and-play’ system.

What does orchestration look like on the ground?

These three companies moved to implement orchestration to solve business problems that would otherwise be intractable. In the process they saved themselves hundreds of millions of dollars, expanded their services for customers, and cemented their positions as leaders in their fields.

1: Pearson

Online learning platform Pearson relies on being able to serve a large and rapidly-growing user base. But they can’t do that with conventional IT structures. ‘To transform our infrastructure, we had to think beyond simply enabling automated provisioning,’ explains Chris Jackson, Pearson’s Director for Cloud Product Engineering.

Using Docker containers and Kubernetes for orchestration, Pearson experienced 15%–20% developer productivity savings and SLA service without outages even at peak demand — and even while peak demand grows. Provisioning activities that previously took up to nine months can now be completed in just a few minutes.

2: UPS

UPS delivers over 20 million packages a day to customers all over the globe. This presents a significant logistical challenge, which UPS attempted to address using ORION, or On-Road Integrated Optimization and Navigation, which analyses optimal routes between 250 million address points for over 191,000 drivers. ORION works pretty well: it saves UPS about 10 million gallons of gas, 100,000 metric tonnes of CO2, and about $300–$400 million a year.

UPS couldn’t do all this without container technology, which allows them to pipe data upstream in their system and predict issues before they arise: “Historically, we were reactive. We would collect information, then do analysis,” says Stacie Morgan, senior application development manager, UPS. “Now, we can see how packages flow through our network to help center supervisors predict volume and staffing needs, based on weather or other factors. Data helps us optimize our operations to increase customer satisfaction and profitability.”

3: Vanguard

Vanguard doesn’t deliver education or packages. It delivers financial services, which rely on a library of over 1,000 separate applications. Managing that level of complex, interrelated provisioning made the company look around for tools to structure its processes, especially given its plans to scale.

The company chose Amazon Elastic Container Service (ECS), allowing it to store its data and applications in the cloud with an adequate level of security.

‘By using AWS services, Vanguard seamlessly architected a system based on a consumption model that enhanced transparency, increased deployment frequency, and reduced costs, thus enabling the migration path for Vanguard microservices to run using Amazon ECS on AWS Fargate,’ says Brian Kiefer, head of the Amazon ECS and AWS Fargate solutions, Vanguard.

Understanding orchestration for your organization

It’s less important to use the right terminology than it is to recognize the commonalities that link disruptive technologies. You can expect them to leverage APIs to deliver capabilities across vendors, platforms and domains, and to offer low-code or code-optional setups that let less technical users access these benefits. Most importantly, they’ll deliver solutions that focus not only on task automation but also on integrating and connecting the digital enterprise.

The eventual leaders in this field will not contribute to tool sprawl and stack overload. Instead, they’ll incorporate existing investments while increasing agility and productivity and improving experiences.

At Pliant, we have introduced an ROI dashboard that helps businesses measure and report on the value our solution delivers. Unlike traditional platforms or manual coding, Pliant’s low-code modern interface includes millions of actions that empower day-one value. An enabled user can design, publish, and execute a workflow in less than an hour that previously required days, weeks, or months of effort with traditional approaches.